AMD says its Instinct MI500 AI accelerator will arrive in 2027, but is that too late with Nvidia set to introduce Vera-Rubin in 2026?

AMD MI500 is planned around AMD’s CDNA 6 architecture with 2nm manufacturing and HBM4E memory

- AMD targets MI500 launch in 2027 as Nvidia prepares Vera-Rubin a year earlier

- CES 2026 shows growing gap between AMD and Nvidia AI accelerator timelines

- AMD expands AI portfolio while next generation hardware remains a year out

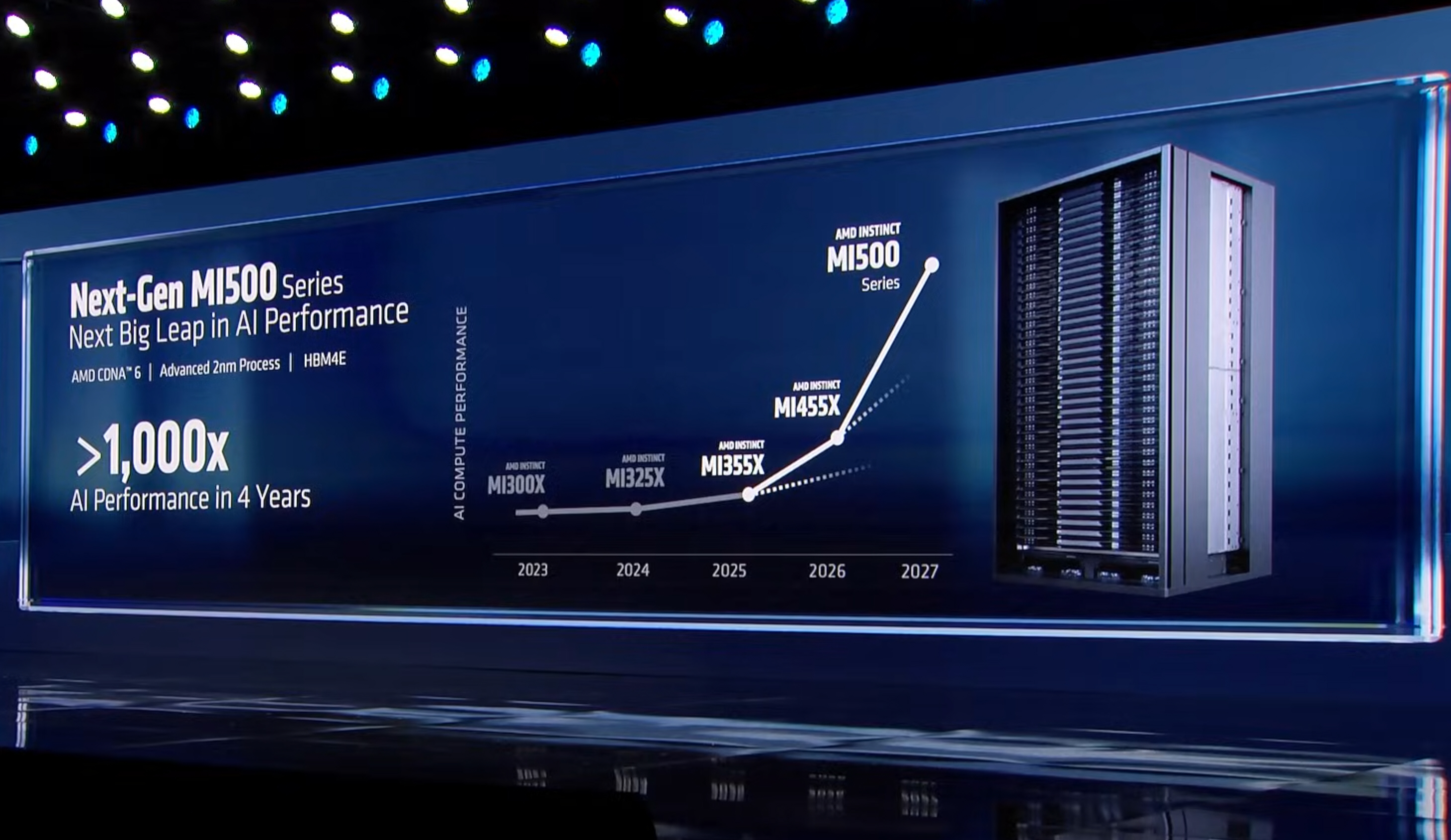

At CES 2026, AMD discussed its near- and longer-term AI hardware plans, including a preview of the Instinct MI500 Series accelerators expected to arrive in 2027.

The company used the show to present an early look at Helios, a rack-scale platform built around Instinct MI455X GPUs and EPYC Venice CPUs. Helios is positioned as a blueprint for very large-scale AI infrastructure rather than a shipping product.

AMD also introduced the Instinct MI440X, a new accelerator aimed at on-prem enterprise deployments and designed to fit into existing eight-GPU systems for training, fine-tuning, and inference workloads.

Nvidia Vera-Rubin also on the way

More interesting for many industry watchers, however, is what comes next. AMD said the Instinct MI500 Series is planned for launch in 2027 and will deliver a significant jump in AI performance compared with the MI300X generation.

MI500 is expected to use AMD’s CDNA 6 architecture, a 2nm process, and HBM4E memory.

AMD claims the design is on track to deliver up to a 1,000x increase in AI performance over MI300X, although, with the launch still some way off, no detailed benchmarks were shared.

As exciting as this might be, the timing is awkward for AMD because Nvidia is preparing to introduce its Vera-Rubin platform this year.

At CES 2026, Nvidia also detailed the successor to its Grace-Blackwell rack-scale designs. The Vera-Rubin platform is built from six new chips designed to operate as a single rack-scale system.

These include the Vera CPU, Rubin GPU, NVLink 6 switch, ConnectX-9 SuperNIC, BlueField-4 DPU, and Spectrum-6 Ethernet switch.

In its NVL72 configuration, the system combines 72 Rubin GPUs and 36 Vera CPUs connected through NVSwitch and NVLink fabric to operate as a shared-memory system.

Nvidia says that, compared with Blackwell, Vera-Rubin NVL72 systems cut the inference cost per token for mixture-of-experts (MoE) models by 10x and need a quarter as many GPUs to train them.

Rubin GPUs use eight stacks of HBM4 memory and include a new Transformer Engine with hardware-supported adaptive compression, which is intended to improve efficiency during inference and training without affecting model accuracy.

Rubin-based systems will be available from partners in the second half of 2026, including NVL72 rack-scale systems and smaller HGX NVL8 configurations, with deployments planned across cloud providers, AI infrastructure operators, and system vendors.

By the time AMD’s Instinct MI500 Series arrives in 2027, Nvidia’s Vera-Rubin platform is expected to be available from partners and in use at scale.

Wayne Williams is a freelancer writing news for TechRadar Pro. He has been writing about computers, technology, and the web for 30 years. In that time he wrote for most of the UK’s PC magazines, and launched, edited and published a number of them too.